Container orchestration has become the backbone of modern cloud-native infrastructure in 2026. As organizations transition from monolithic applications to microservices architectures, the platform managing these distributed workloads fundamentally determines operational efficiency, scalability, and team productivity. While Kubernetes has captured 92% of the container orchestration market, Docker Swarm remains a viable alternative for specific use cases where simplicity trumps complexity. Whether you’re a student exploring DevOps fundamentals, a developer architecting scalable applications, or an IT leader evaluating infrastructure platforms, understanding the strategic trade-offs between Kubernetes and Docker Swarm is essential for making decisions that align technical capabilities with organizational readiness. This comprehensive guide examines both orchestration platforms through architectural, operational, and business perspectives to help you navigate one of the most consequential infrastructure choices facing modern development teams.

Container Orchestration Landscape in 2026

The container revolution has fundamentally transformed application deployment, with 92% of IT professionals now using Docker for containerization, representing the largest single-year technology adoption jump ever recorded. As containerized workloads proliferate, orchestration platforms that manage these containers across clusters become critical infrastructure. The choice between Kubernetes and Docker Swarm now determines whether an organization gains access to the full ecosystem of cloud-native tools or prioritizes the operational simplicity that accelerates time-to-production for smaller teams.

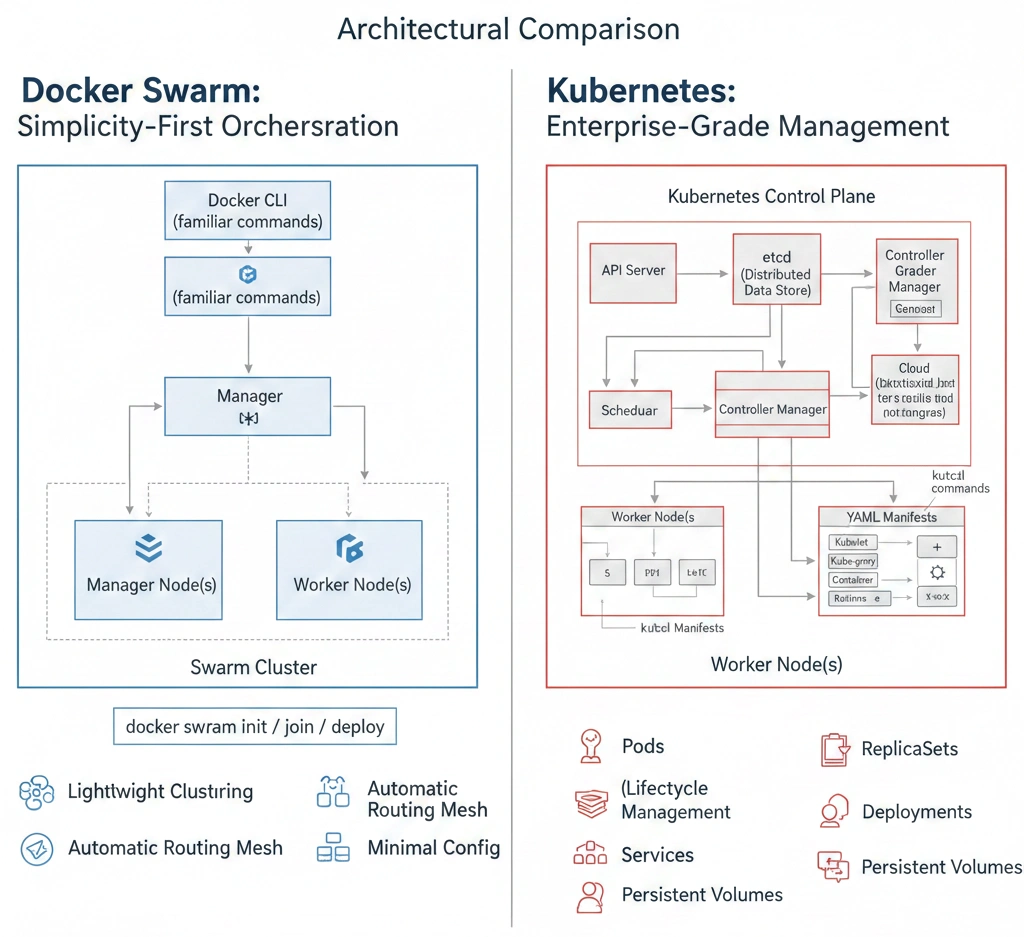

Docker Swarm: Simplicity-First Orchestration

Definition

Docker Swarm, also called Swarm mode, is Docker’s native container orchestration tool, built directly into Docker Engine since version 1.12. It turns a pool of Docker hosts into a single cluster, enabling service deployment across multiple machines without installing separate orchestration software. Swarm uses the same Docker CLI and API that developers already know, extended with commands such as docker service, docker node, and docker stack, which keeps the learning curve far shallower than adopting a new orchestration platform. Unlike Kubernetes, which introduces entirely new concepts and abstractions, Swarm extends familiar Docker workflows with clustering capabilities, making it the fastest path from single-host Docker to multi-host orchestration.

Advantages

- Minimal additional learning: Uses the same Docker CLI commands developers already know, with few new abstractions to master

- Instant setup: Initialize a swarm with a single command, “docker swarm init”, with no complex configuration required

- Built-in integration: Included with Docker Engine installation, no separate software or dependencies required

- Lightweight footprint: Minimal resource overhead compared to Kubernetes control plane requirements

- Automatic load balancing: Built-in routing mesh distributes traffic across service replicas without external tools

- Lower operational burden: Significantly reduced maintenance and troubleshooting complexity for small teams

Disadvantages

- Limited ecosystem: Fewer third-party tools, integrations, and community resources compared to Kubernetes

- No native autoscaling: Lacks built-in equivalents of Kubernetes’ Horizontal Pod Autoscaler, Vertical Pod Autoscaler, and Cluster Autoscaler

- Basic networking: Simplified networking model cannot match Kubernetes network policies and advanced configurations

- Stagnant development: Slower feature development velocity with smaller community contribution base

- Career limitations: Fewer job opportunities and market demand for Swarm expertise versus Kubernetes

- Scaling ceiling: Swarm’s performance advantages diminish beyond roughly 20 nodes, where Kubernetes’ more mature optimizations pay off

Docker Swarm Core Concepts:

- Nodes: Docker hosts participating in the swarm, designated either as managers, which handle orchestration, or as workers, which execute tasks.

- Services: Definitions of the tasks to run on nodes, including image, replica count, port mapping, and update strategy.

- Tasks: Individual containers running as part of a service, scheduled and monitored by manager nodes.

- Load balancing: Automatic routing mesh distributing incoming connections across healthy service replicas.

- Overlay networks: Virtual networks spanning multiple hosts, enabling container-to-container communication across the cluster, with optional encryption of application traffic.
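To make the routing mesh idea concrete, here is a toy Python model, not Docker’s implementation: every node accepts traffic on a service’s published port and forwards it to a healthy replica, even when no replica runs on the node that received the request. The round-robin selection, the `RoutingMesh` and `Replica` classes, and the node names are illustrative assumptions; Docker handles the real balancing and health tracking internally.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    node: str            # node where this task's container runs
    healthy: bool = True


@dataclass
class RoutingMesh:
    """Toy routing mesh (hypothetical): any swarm node can accept a request
    on the published port and forward it to a healthy replica elsewhere."""
    nodes: list
    replicas: list
    _next: int = 0       # round-robin cursor (assumed balancing strategy)

    def route(self, entry_node: str) -> str:
        if entry_node not in self.nodes:
            raise ValueError(f"{entry_node} is not part of the swarm")
        healthy = [r for r in self.replicas if r.healthy]
        if not healthy:
            raise RuntimeError("no healthy replicas available")
        target = healthy[self._next % len(healthy)]
        self._next += 1
        return target.node


mesh = RoutingMesh(nodes=["node-1", "node-2", "node-3"],
                   replicas=[Replica("node-1"), Replica("node-2")])
print(mesh.route("node-3"))  # node-3 runs no replica but still serves traffic
```

Marking a replica unhealthy simply removes it from the candidate list, so the remaining replicas absorb its share of requests.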

Kubernetes: Enterprise-Grade Platform

Definition

Kubernetes, often abbreviated K8s, is an open-source container orchestration platform originally developed by Google, drawing on its internal Borg and Omega systems, and now maintained by the Cloud Native Computing Foundation. Kubernetes provides comprehensive infrastructure for automating the deployment, scaling, and management of containerized applications across clusters of machines. In contrast to Docker Swarm’s simplicity-first philosophy, Kubernetes offers extensive features including sophisticated scheduling, advanced networking, built-in health checking and self-healing, automatic scaling, and rich ecosystem integration. As a result, Kubernetes turns container management from basic orchestration into programmable, declarative infrastructure, enabling organizations to define, version-control, and automate every aspect of the application lifecycle across environments ranging from on-premises data centers to multi-cloud deployments.

Advantages

- Industry standard: 92% market share ensures long-term viability, extensive documentation, and largest community

- Massive ecosystem: Thousands of tools, integrations, and extensions covering monitoring, security, networking, and storage

- Advanced autoscaling: Horizontal pod autoscaling, vertical pod autoscaling, and cluster autoscaler handle dynamic workloads

- Multi-cloud portability: Runs consistently across AWS EKS, Azure AKS, Google GKE, on-premises, and bare metal

- Enterprise features: Role-based access control, network policies, pod security standards, and comprehensive audit logging

- Career opportunities: High market demand for Kubernetes expertise with premium compensation

- Managed services: All major cloud providers offer managed Kubernetes reducing operational burden significantly

Disadvantages

- Steep learning curve: Complex concepts including pods, deployments, services, ingress, volumes, and networking require significant study

- Operational complexity: Requires dedicated platform team or managed service to operate reliably at scale

- Resource overhead: Control plane components consume substantial CPU and memory even for small clusters

- YAML complexity: Verbose configuration files become unwieldy for complex applications without templating tools

- Overkill for small apps: Simple applications suffer from unnecessary complexity when Kubernetes features go unused

- Higher costs: 30-50% higher total operating expenses in the first year due to tooling, training, and staffing requirements

Kubernetes Core Architecture:

- Control plane: Control plane nodes (historically called master nodes) running the API server, scheduler, controller manager, and the etcd distributed datastore that holds cluster state.

- Pods: Smallest deployable units, containing one or more containers that share a network namespace and storage volumes.

- Deployments: Controllers managing pod replicas, enabling declarative updates and rollbacks.

- Services: Abstract network endpoints providing stable IP addresses and DNS names for groups of pods.

- Ingress: HTTP/HTTPS routing layer exposing services externally, with load balancing and TLS termination.

- Namespaces: Virtual clusters enabling multi-tenancy and resource isolation within a single physical cluster.
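Services find their pods through label selectors rather than fixed addresses, which is what lets Deployments replace pods freely. The following Python sketch models equality-based `matchLabels` semantics and shows which pods a Service would route to; the pod records, IP addresses, and helper names are hypothetical, and in a real cluster endpoint tracking is done by the EndpointSlice controller.

```python
def selector_matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every key/value in the selector must appear in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector: dict, pods: list) -> list:
    """Return the IPs of ready pods whose labels match the Service selector."""
    return [pod["ip"] for pod in pods
            if pod["ready"] and selector_matches(selector, pod["labels"])]


# Hypothetical pods: two web replicas (one not yet ready) and a database pod.
pods = [
    {"ip": "10.1.0.4", "ready": True,  "labels": {"app": "web", "tier": "frontend"}},
    {"ip": "10.1.0.5", "ready": False, "labels": {"app": "web", "tier": "frontend"}},
    {"ip": "10.1.0.9", "ready": True,  "labels": {"app": "db"}},
]
print(service_endpoints({"app": "web"}, pods))
```

Extra labels on a pod (here `tier`) do not prevent a match; the selector only needs to be a subset of the pod’s labels.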

Technical Architecture Deep Dive

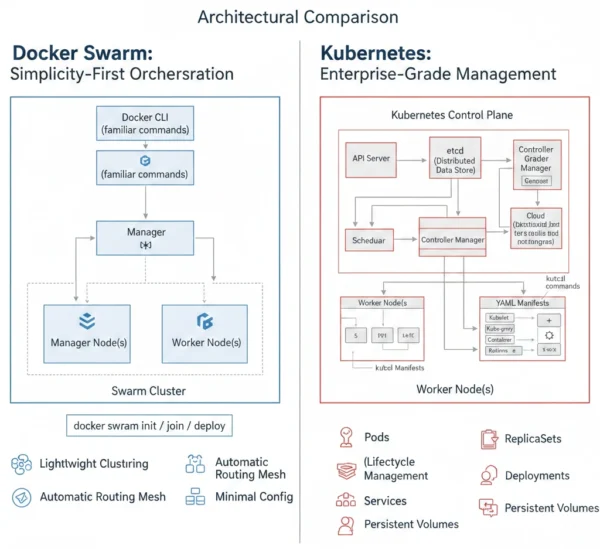

Docker Swarm Architecture

- Manager nodes using Raft consensus for distributed state management

- Worker nodes executing containers as directed by manager scheduling

- Overlay networks connecting containers across hosts, with optional encryption of application traffic (docker network create --opt encrypted)

- Routing mesh providing ingress load balancing across service replicas

- Internal DNS resolver mapping service names to container IP addresses

- Secrets management encrypting sensitive data at rest and in transit

- Rolling update mechanism enabling zero-downtime service deployments
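The Raft-based manager design has a simple arithmetic consequence that explains Docker’s advice to run an odd number of managers: a swarm of N managers needs a majority, floor(N/2) + 1, to stay available, so it tolerates at most (N - 1) / 2 manager failures, rounded down. A small Python sketch of that arithmetic:

```python
def quorum(managers: int) -> int:
    """Smallest majority of manager nodes needed for Raft to make progress."""
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """Manager nodes that can fail while a majority remains."""
    return (managers - 1) // 2


for n in range(1, 8):
    print(f"{n} managers: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from 3 to 4 managers raises the quorum from 2 to 3 without tolerating any additional failure, which is why even manager counts add coordination overhead but no resilience.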

Kubernetes Architecture

- API server providing RESTful interface for all cluster operations

- etcd distributed key-value store maintaining cluster configuration state

- Scheduler assigning pods to nodes based on resource requirements and constraints

- Controller manager running control loops ensuring desired state matches actual state

- Kubelet agent on each node managing pod lifecycle and reporting status

- Container Runtime Interface (CRI) supporting containerd and CRI-O; Docker Engine works only through the cri-dockerd adapter since dockershim was removed in Kubernetes 1.24

- CNI plugins enabling flexible network topology and policy enforcement
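The control-loop idea behind the controller manager can be sketched in a few lines of Python: compare desired state with observed state and act only on the difference. This is a simplified, single-process model of a ReplicaSet-style controller; the `ReplicaState` class and pod naming scheme are hypothetical, and real controllers watch the API server rather than mutating a local list.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ReplicaState:
    desired: int                                  # like spec.replicas
    running: list = field(default_factory=list)   # observed pod names


def reconcile(state: ReplicaState, new_name) -> list:
    """One pass of a level-triggered control loop: converge running pods to the desired count."""
    actions = []
    while len(state.running) < state.desired:
        name = new_name()
        state.running.append(name)
        actions.append(f"create {name}")
    while len(state.running) > state.desired:
        name = state.running.pop()
        actions.append(f"delete {name}")
    return actions


def make_namer(prefix: str):
    """Hypothetical pod naming: prefix-1, prefix-2, ..."""
    counter = itertools.count(1)
    return lambda: f"{prefix}-{next(counter)}"


namer = make_namer("web")
state = ReplicaState(desired=3)
print(reconcile(state, namer))   # initial rollout creates three pods
state.running.remove("web-2")    # simulate a crashed pod
print(reconcile(state, namer))   # the loop replaces it
state.desired = 2                # scale down
print(reconcile(state, namer))
```

Because the loop reacts to the current gap rather than to individual events, a missed crash notification is corrected on the next pass.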

Deployment Workflow Comparison

Docker Swarm Deployment

- Initialize swarm on first manager: docker swarm init

- Join additional nodes using provided token commands

- Create service from image: docker service create

- Swarm automatically schedules tasks across available nodes

- Routing mesh exposes service on all node IP addresses

- Update service with new image: docker service update

- Swarm performs rolling update maintaining availability
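Swarm’s rolling update can be tuned with flags on docker service update, for example --update-parallelism (how many tasks are replaced at once; 0 means all at once) and --update-delay (the pause between batches). The Python sketch below only models how tasks are grouped into batches and how many replicas stay up, assuming the default stop-first update order, in which an old task is stopped before its replacement starts; the function names and task names are hypothetical.

```python
def update_batches(tasks: list, parallelism: int = 1) -> list:
    """Split tasks into update batches, mirroring --update-parallelism (0 = all at once)."""
    if parallelism < 0:
        raise ValueError("parallelism must be >= 0")
    size = parallelism or len(tasks)
    if size == 0:        # no tasks to update
        return []
    return [tasks[i:i + size] for i in range(0, len(tasks), size)]


def min_replicas_serving(tasks: list, parallelism: int = 1) -> int:
    """With stop-first ordering, each batch is down while it is being replaced."""
    batches = update_batches(tasks, parallelism)
    return len(tasks) - max((len(b) for b in batches), default=0)


tasks = [f"web.{i}" for i in range(1, 6)]   # five replicas of a hypothetical service
for p in (1, 2, 0):
    print(p, update_batches(tasks, p), min_replicas_serving(tasks, p))
```

Setting parallelism to 0 trades availability for speed: in this model every replica is down at the same time.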

Kubernetes Deployment

- Install and configure Kubernetes cluster using kubeadm or managed service

- Create deployment YAML defining pods, replicas, and container specifications

- Apply configuration: kubectl apply -f deployment.yaml

- Scheduler assigns pods to nodes based on resource availability

- Create service exposing deployment through stable IP and DNS

- Configure ingress controller for external HTTP/HTTPS routing

- Update deployment with new image triggering automatic rollout
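For comparison with Swarm’s single docker service create, the manifests behind the Kubernetes steps can be generated programmatically. This Python sketch builds a minimal apps/v1 Deployment and a matching ClusterIP Service as plain dictionaries and prints them as JSON, which kubectl apply -f also accepts; the service name, image tag, port, and rolling-update settings are illustrative assumptions.

```python
import json


def deployment(name: str, image: str, replicas: int = 3, port: int = 80) -> dict:
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},   # must match the pod template labels
            "strategy": {   # surge one extra pod, never drop below the desired count
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }]},
            },
        },
    }


def service(name: str, port: int = 80) -> dict:
    # ClusterIP (the default type) gives the pods a stable virtual IP and DNS name.
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": {"app": name},
                 "ports": [{"port": port, "targetPort": port}]},
    }


print(json.dumps(deployment("web", "nginx:1.27"), indent=2))
print(json.dumps(service("web"), indent=2))
```

Changing the image field and re-applying the Deployment is what triggers the automatic rollout in the last step.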

Networking Models

| Networking Aspect | Docker Swarm | Kubernetes |
|---|---|---|
| Network Model | Overlay networks with routing mesh for ingress | Pod networking with CNI plugins and service abstraction |
| Service Discovery | Built-in DNS mapping service names to VIP addresses | CoreDNS providing service discovery within namespaces |
| Load Balancing | Automatic routing mesh distributing traffic across replicas | Service types including ClusterIP, NodePort, LoadBalancer |
| Network Policies | Not supported, relies on overlay network isolation | NetworkPolicy resources defining pod-to-pod firewall rules |
| Ingress | Basic ingress through published ports on routing mesh | Ingress controllers providing advanced HTTP routing and TLS |
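The Network Policies row marks one of the sharpest differences in the table. The sketch below builds a standard networking.k8s.io/v1 NetworkPolicy that admits traffic to app=api pods only from app=frontend pods on TCP 8080, then evaluates it with a deliberately simplified Python checker. The labels, port, and helper names are hypothetical; the checker ignores namespaces, ipBlock peers, egress, and the combination of multiple policies, and actual enforcement requires a CNI plugin that supports NetworkPolicy.

```python
def network_policy() -> dict:
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "api-allow-frontend"},
        "spec": {
            "podSelector": {"matchLabels": {"app": "api"}},   # pods this policy isolates
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 8080}],
            }],
        },
    }


def selects(selector: dict, labels: dict) -> bool:
    """matchLabels only; an empty selector selects every pod."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policy: dict, src: dict, dst: dict, port: int) -> bool:
    """Simplified single-policy check (same namespace, TCP, pod selectors only)."""
    spec = policy["spec"]
    if not selects(spec["podSelector"], dst):
        return True                      # destination not isolated by this policy
    for rule in spec.get("ingress", []):
        peers = rule.get("from")         # empty or missing: all sources
        ports = rule.get("ports")        # empty or missing: all ports
        peer_ok = not peers or any(
            "podSelector" in p and selects(p["podSelector"], src) for p in peers)
        port_ok = not ports or any(p.get("port") == port for p in ports)
        if peer_ok and port_ok:
            return True
    return False


policy = network_policy()
print(ingress_allowed(policy, {"app": "frontend"}, {"app": "api"}, 8080))
print(ingress_allowed(policy, {"app": "batch"}, {"app": "api"}, 8080))
```

Swarm has no equivalent object; isolation there comes only from which overlay networks a service is attached to.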

Use Cases and Deployment Scenarios

When to Choose Docker Swarm

- Small teams (2-10 developers): Organizations lacking dedicated platform engineering resources

- Simple applications: Stateless microservices without complex networking or scaling requirements

- Proof of concepts: Rapid prototyping and experimentation before committing to production platform

- Edge deployments: Resource-constrained environments where Kubernetes overhead is prohibitive

- Docker familiarity: Teams with strong Docker expertise but limited time for Kubernetes learning

- Low-maintenance preference: Organizations prioritizing operational simplicity over feature richness

When to Choose Kubernetes

- Enterprise scale: Organizations running hundreds of services across dozens or hundreds of nodes

- Complex requirements: Applications needing advanced networking, storage orchestration, or security policies

- Multi-cloud strategy: Workloads running across AWS, Azure, Google Cloud, or on-premises infrastructure

- Dynamic workloads: Applications with variable traffic requiring horizontal and vertical autoscaling

- Platform engineering teams: Organizations with dedicated SRE or platform teams managing infrastructure

- Ecosystem requirements: Need for extensive tooling in monitoring, security, service mesh, or GitOps

Industry Adoption Patterns

| Industry | Docker Swarm Use Cases | Kubernetes Use Cases |
|---|---|---|
| Startups & SMBs | MVP development, bootstrapped teams, cost-conscious deployments | VC-funded growth stage requiring rapid scaling and enterprise features |
| Manufacturing | Factory floor edge computing, OT network deployments, air-gapped systems | Cloud-connected IoT platforms, predictive maintenance analytics |
| Financial Services | Internal tools, development environments, non-customer-facing workloads | Trading platforms, customer portals, regulatory compliance workloads |
| Healthcare | Clinic management systems, small practice deployments | Hospital EHR systems, telemedicine platforms, HIPAA-compliant applications |
| E-commerce | Internal admin tools, staging environments | Customer-facing stores, payment processing, inventory management |

12 Critical Differences: Kubernetes vs Docker Swarm

| Aspect | Docker Swarm | Kubernetes |
|---|---|---|
| Learning Curve | Minimal learning required, uses familiar Docker commands and concepts | Steep learning curve requiring mastery of pods, services, deployments, ingress, volumes |
| Installation Complexity | Single command “docker swarm init” activates orchestration instantly | Complex installation requiring kubeadm, managed service, or distribution-specific tools |
| Market Share | 2.5-5% adoption, niche player with stable but small user base | 92% market dominance, de facto industry standard with massive ecosystem |
| Autoscaling | No native autoscaling, requires external scripts monitoring metrics | Horizontal Pod Autoscaler built in; Vertical Pod Autoscaler and Cluster Autoscaler available as add-ons |
| Networking | Simple overlay networks with automatic routing mesh for ingress | Complex CNI plugins, network policies, service mesh integration capabilities |
| Ecosystem Size | Limited third-party tools and integrations compared to Kubernetes | Thousands of certified tools, operators, and integrations spanning monitoring to security |
| High Availability | Manager quorum using Raft consensus, odd number of managers (3, 5, or 7) recommended | Multiple control plane nodes with etcd replication, sophisticated failure handling |
| Resource Overhead | Minimal control plane overhead, efficient for small clusters under 20 nodes | Substantial control plane requirements consuming CPU and memory even for small deployments |
| Configuration | Docker Compose YAML files, familiar format for Docker users | Kubernetes manifests with different structure, or Helm charts for templating |
| Cloud Provider Support | No managed Swarm services, self-managed deployment required | All major clouds offer managed Kubernetes: EKS, AKS, GKE |
| Job Market Demand | Limited career opportunities, smaller skill premium in job market | High demand for Kubernetes expertise with premium compensation |
| Long-term Viability | Stable through 2030 per Mirantis commitment but limited growth trajectory | Strong momentum with continuous innovation and expanding adoption |
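The autoscaling row deserves a closer look. Kubernetes documents the Horizontal Pod Autoscaler’s core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping changes when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces only that arithmetic plus min/max clamping; it ignores stabilization windows, pod readiness, and missing metrics, and the parameter names are illustrative. Swarm has no built-in counterpart, so equivalent logic would have to live in an external script.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas           # close enough to target: no change
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> scale out.
print(hpa_desired_replicas(4, 90, 60))
```

The tolerance band prevents the replica count from flapping when utilization hovers just above or below the target.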

Implementation and Migration Strategy

Getting Started: Platform Selection

- Team Assessment: First, evaluate current Docker expertise, available learning time, and whether dedicated platform engineering resources exist.

- Application Profile: Then, analyze workload complexity, scaling requirements, networking needs, and whether advanced features like autoscaling are essential.

- Scale Projection: Additionally, project cluster size over 2-3 years considering node count, service count, and expected traffic growth patterns.

- Ecosystem Requirements: Furthermore, identify must-have integrations for monitoring, logging, security, service mesh, or CI/CD pipelines.

- Cloud Strategy: Subsequently, determine whether multi-cloud portability matters or whether a single cloud provider with managed Kubernetes is acceptable.

- Risk Tolerance: Finally, assess comfort with cutting-edge technology versus preference for stable, proven solutions with minimal change.

Migration Path: Swarm to Kubernetes

Phase 1: Preparation (Weeks 1-4)

- Audit current Swarm services and Docker Compose configurations

- Identify dependencies, persistent volumes, and secrets requiring migration

- Select Kubernetes distribution: managed service vs self-hosted

- Train team on Kubernetes fundamentals through courses and documentation

- Set up development Kubernetes cluster for experimentation

Phase 2: Conversion (Weeks 5-8)

- Convert Docker Compose files to Kubernetes manifests using Kompose

- Manually adjust generated YAML for Kubernetes best practices

- Recreate persistent volumes using StorageClasses and PersistentVolumeClaims

- Migrate secrets and configuration into Kubernetes ConfigMaps and Secrets

- Test applications thoroughly in development Kubernetes environment
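Kompose automates the Compose-to-manifest conversion, but it helps to see what the mapping looks like. The following Python function is a hypothetical, heavily simplified converter, not Kompose’s actual output: it maps one Compose service’s image, deploy.replicas, short-syntax ports, and environment to a Deployment and a ClusterIP Service. The service name, image, and port values are made up for illustration.

```python
def compose_to_k8s(name: str, svc: dict) -> list:
    """Convert one Compose service to [Deployment, Service] (hypothetical, simplified)."""
    labels = {"app": name}
    replicas = svc.get("deploy", {}).get("replicas", 1)

    # Only "HOST:CONTAINER" or "CONTAINER" short syntax is handled here.
    port_pairs = []
    for spec in svc.get("ports", []):
        published, _, target = str(spec).partition(":")
        port_pairs.append((int(published), int(target or published)))

    env = svc.get("environment", {})
    if isinstance(env, list):            # ["KEY=value", ...] form
        env = dict(item.partition("=")[::2] for item in env)

    container = {
        "name": name,
        "image": svc["image"],
        "env": [{"name": k, "value": "" if v is None else str(v)} for k, v in env.items()],
        "ports": [{"containerPort": target} for _, target in port_pairs],
    }
    deployment = {
        "apiVersion": "apps/v1", "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {"replicas": replicas,
                 "selector": {"matchLabels": dict(labels)},
                 "template": {"metadata": {"labels": dict(labels)},
                              "spec": {"containers": [container]}}},
    }
    manifests = [deployment]
    if port_pairs:
        manifests.append({
            "apiVersion": "v1", "kind": "Service",
            "metadata": {"name": name},
            "spec": {"selector": dict(labels),
                     "ports": [{"port": p, "targetPort": t} for p, t in port_pairs]},
        })
    return manifests


compose_api = {"image": "example/api:1.4", "deploy": {"replicas": 3},
               "ports": ["8080:80"], "environment": ["LOG_LEVEL=info"]}
for manifest in compose_to_k8s("api", compose_api):
    print(manifest["kind"], manifest["spec"])
```

The output also exposes a semantic gap: Swarm publishes port 8080 on every node through the routing mesh, while the generated ClusterIP Service is reachable only inside the cluster. Reproducing Swarm’s external exposure needs a NodePort or LoadBalancer Service or an Ingress, which is part of the manual YAML adjustment step.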

Phase 3: Migration (Weeks 9-12)

- Deploy production Kubernetes cluster with proper sizing and high availability

- Migrate services incrementally starting with non-critical workloads

- Run Swarm and Kubernetes in parallel during transition period

- Implement monitoring, logging, and observability in Kubernetes

- Decommission the Swarm cluster after validating that all services are running stably

Implementation Best Practices

Success Factors

- Start with Docker Swarm for prototypes, consider Kubernetes when scaling or complexity increases

- Use managed Kubernetes services (EKS, AKS, GKE) to reduce operational burden significantly

- Invest heavily in team training before production Kubernetes deployment to avoid costly mistakes

- Implement GitOps practices using tools like ArgoCD or Flux for declarative infrastructure

- Establish monitoring and observability from day one using Prometheus, Grafana, and distributed tracing

- Build platform engineering capabilities gradually rather than overwhelming team with complexity

Common Pitfalls

- Never adopt Kubernetes without clear business justification beyond resume-driven development

- Avoid underestimating the Kubernetes learning curve; budget months, not weeks, for team proficiency

- Don’t deploy production Kubernetes without proper monitoring, logging, and disaster recovery

- Resist the temptation to build a custom platform; leverage managed services and existing tools

- Never migrate everything simultaneously; an incremental transition reduces risk substantially

- Don’t ignore security; implement RBAC, network policies, and pod security standards from the start

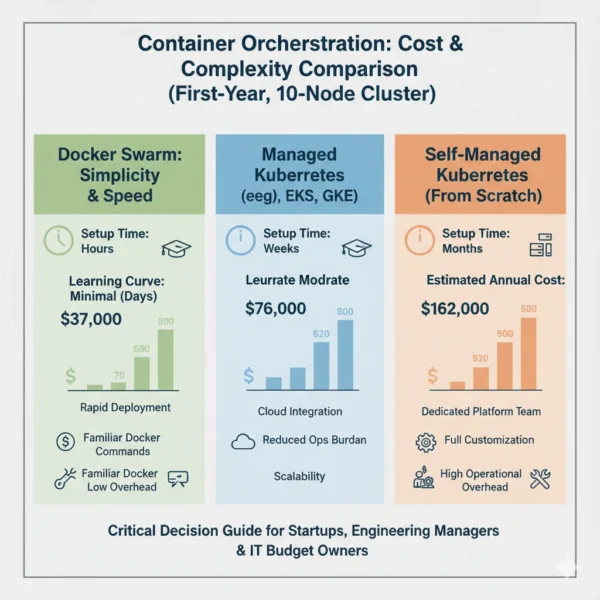

Cost, Complexity and Learning Curve Analysis

Initial Setup Time

Docker Swarm: Hours to get first cluster running

Kubernetes: Days to weeks for proper production setup

Learning Investment

Docker Swarm: 1-2 days for Docker users

Kubernetes: 2-3 months to operational proficiency

Operational Overhead

Docker Swarm: Minimal ongoing maintenance

Kubernetes: Requires dedicated platform team or managed service

Total Cost of Ownership: 10-Node Cluster First Year

| Cost Component | Docker Swarm | Kubernetes (Self-Managed) | Kubernetes (Managed EKS/AKS/GKE) |
|---|---|---|---|
| Infrastructure | $12,000 | $15,000 | $18,000 (includes control plane fees) |
| Training & Learning | $2,000 | $15,000 | $10,000 |
| Operational Labor | $20,000 (part-time) | $120,000 (full-time SRE) | $40,000 (part-time oversight) |
| Tooling & Monitoring | $3,000 | $12,000 | $8,000 |
| Total First Year | $37,000 | $162,000 | $76,000 |
| Cost Difference | Baseline | +338% vs Swarm | +105% vs Swarm |
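The totals and percentages in the table can be reproduced with a few lines of Python, which also makes it easy to substitute your own estimates. The figures below are the table’s illustrative numbers, not measured costs.

```python
COSTS = {
    "Docker Swarm": {"Infrastructure": 12_000, "Training & Learning": 2_000,
                     "Operational Labor": 20_000, "Tooling & Monitoring": 3_000},
    "Kubernetes (Self-Managed)": {"Infrastructure": 15_000, "Training & Learning": 15_000,
                                  "Operational Labor": 120_000, "Tooling & Monitoring": 12_000},
    "Kubernetes (Managed)": {"Infrastructure": 18_000, "Training & Learning": 10_000,
                             "Operational Labor": 40_000, "Tooling & Monitoring": 8_000},
}


def first_year_totals(costs: dict) -> dict:
    return {option: sum(items.values()) for option, items in costs.items()}


def percent_vs(baseline: float, value: float) -> int:
    """Percentage difference relative to the baseline, rounded to a whole percent."""
    return round((value - baseline) / baseline * 100)


totals = first_year_totals(COSTS)
baseline = totals["Docker Swarm"]
for option, total in totals.items():
    labor_share = COSTS[option]["Operational Labor"] / total
    print(f"{option}: ${total:,} ({percent_vs(baseline, total):+d}% vs Swarm, "
          f"labor {labor_share:.0%} of total)")
```

Operational labor is the largest line item in every column, which is why managed services, which mainly reduce labor in this estimate, narrow the gap.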

While Kubernetes costs substantially more initially, the value proposition shifts at scale. Organizations managing 50+ nodes, complex networking requirements, or mission-critical workloads find that Kubernetes ecosystem benefits justify the increased costs. Docker Swarm remains economically superior for small teams, simple applications, and clusters under 20 nodes, where operational simplicity outweighs advanced features. Managed Kubernetes services like EKS, AKS, and GKE dramatically reduce operational complexity, making Kubernetes viable for mid-sized teams without platform engineering expertise, though at premium pricing versus self-managed Swarm.

Complexity Dimensions Comparison

Docker Swarm Complexity Profile

- Installation: Single command, no separate components to install

- Configuration: Docker Compose YAML format developers already understand

- Networking: Automatic overlay networks, built-in routing mesh

- Service Discovery: Transparent DNS resolution using service names

- Debugging: Standard Docker logs and inspect commands work identically

- Upgrades: Rolling updates through simple docker service update commands

Kubernetes Complexity Profile

- Installation: Complex with kubeadm, managed services, or distributions like Rancher

- Configuration: Kubernetes manifests with different structure requiring learning

- Networking: CNI plugins, network policies, service types, ingress controllers

- Service Discovery: CoreDNS, service abstractions, endpoint slices

- Debugging: kubectl commands, pod logs, describe, events, troubleshooting techniques

- Upgrades: Complex orchestration across control plane and worker nodes

Strategic Decision Framework

The Right Tool for the Right Job

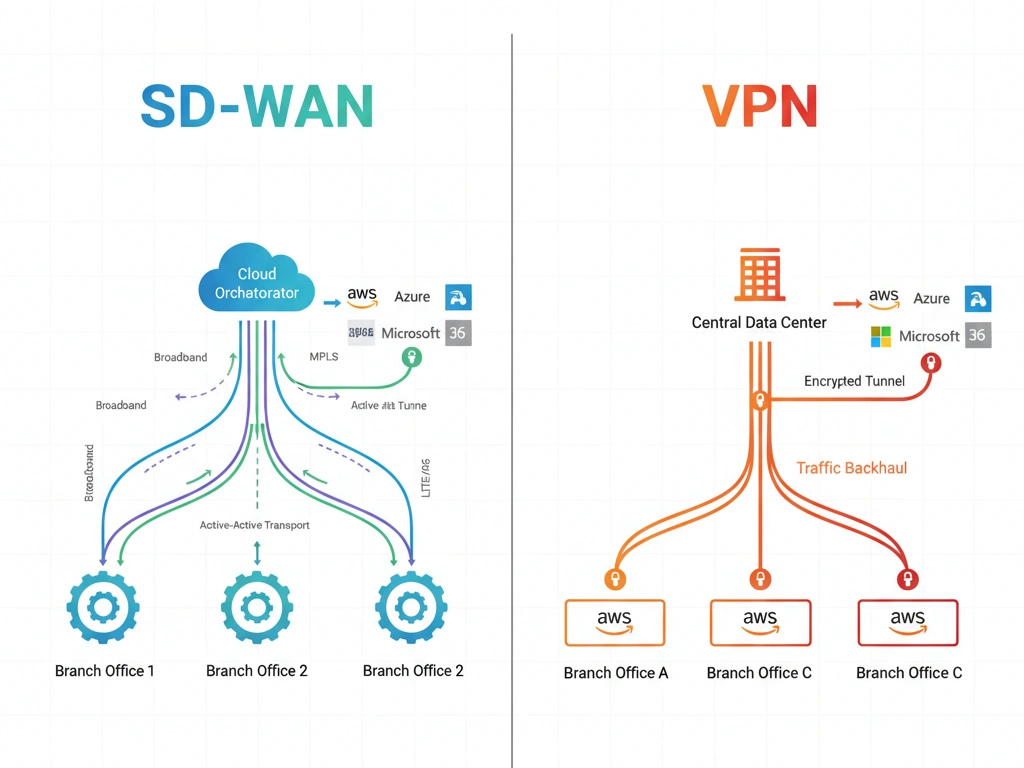

Container orchestration platform selection should align with organizational readiness, application requirements, and long-term strategy rather than following trends. Similar to how SD-WAN and VPN serve different network connectivity needs, Docker Swarm and Kubernetes excel in distinct scenarios where their strengths match specific constraints. Organizations achieve optimal outcomes by honestly assessing current capabilities and choosing platforms that amplify team productivity rather than introducing unnecessary complexity that impedes delivery.

Decision Matrix

| Decision Factor | Choose Docker Swarm When… | Choose Kubernetes When… |
|---|---|---|
| Team Size | 2-10 developers without dedicated platform team | 10+ engineers with dedicated SRE or platform engineering |
| Cluster Scale | Under 20 nodes with 10-50 services | 20+ nodes with 50+ services requiring orchestration |
| Application Complexity | Simple stateless services with basic networking | Complex microservices with advanced networking and storage |
| Scaling Requirements | Manual scaling acceptable, predictable traffic patterns | Dynamic autoscaling required for variable workloads |
| Cloud Strategy | Single cloud or on-premises deployment | Multi-cloud portability or cloud-agnostic architecture |
| Timeline | Need production deployment within days or weeks | Can invest months in setup, training, and optimization |
| Budget | Cost-conscious, limited infrastructure budget | Budget for tooling, training, managed services, or staffing |
| Long-term Vision | Stable architecture without planned major growth | Rapid growth expected, future-proofing infrastructure |
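One way to apply the matrix consistently is to count how many factors point toward Kubernetes. The Python sketch below encodes a few of the table’s thresholds (10+ engineers with a platform team, 20+ nodes, 50+ services, autoscaling, multi-cloud); the `Profile` fields, the equal weighting, and the two-signal cutoff are hypothetical choices, not a validated model.

```python
from dataclasses import dataclass


@dataclass
class Profile:                 # hypothetical summary of an organization
    engineers: int
    has_platform_team: bool
    nodes: int
    services: int
    needs_autoscaling: bool
    multi_cloud: bool


def kubernetes_signals(p: Profile) -> list:
    """Return the decision-matrix factors that favor Kubernetes."""
    signals = []
    if p.engineers >= 10 and p.has_platform_team:
        signals.append("team size")
    if p.nodes >= 20:
        signals.append("cluster scale")
    if p.services >= 50:
        signals.append("service count")
    if p.needs_autoscaling:
        signals.append("scaling requirements")
    if p.multi_cloud:
        signals.append("cloud strategy")
    return signals


def recommend(p: Profile, cutoff: int = 2) -> str:
    """Equal-weight vote; the cutoff of 2 is an arbitrary assumption."""
    return "Kubernetes" if len(kubernetes_signals(p)) >= cutoff else "Docker Swarm"


startup = Profile(engineers=4, has_platform_team=False, nodes=6, services=15,
                  needs_autoscaling=False, multi_cloud=False)
enterprise = Profile(engineers=40, has_platform_team=True, nodes=60, services=180,
                     needs_autoscaling=True, multi_cloud=True)
print(recommend(startup), recommend(enterprise))
```

Timeline, budget, and long-term vision are left out because they are judgment calls; a real evaluation would weigh them explicitly alongside these signals.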

Hybrid Approaches and Progressive Adoption

Start Simple, Scale Smart

Many successful organizations begin with Docker Swarm for initial product development and proof-of-concept validation:

- Launch MVP on Swarm achieving production deployment within weeks

- Focus engineering effort on product features rather than infrastructure

- Validate product-market fit without premature platform investment

- Migrate to Kubernetes when scale, complexity, or team size justifies transition

- Leverage Docker expertise built during Swarm phase for Kubernetes adoption

Strategic Kubernetes Adoption

Organizations with platform engineering resources can start with Kubernetes from day one when:

- Team already possesses Kubernetes expertise from previous roles

- Application architecture requires Kubernetes features from inception

- Budget allows managed Kubernetes eliminating operational complexity

- Avoiding future migration complexity justifies upfront investment

- Competitive pressure demands rapid scaling capability immediately


Making Strategic Orchestration Decisions in 2026

The choice between Kubernetes and Docker Swarm transcends a simple technical comparison; it is a strategic decision about infrastructure philosophy, team investment, and long-term platform evolution. Both orchestrators deliver production-ready container management when deployed appropriately, and the optimal selection aligns platform capabilities with organizational maturity, application requirements, and growth trajectory.

Choose Docker Swarm When:

- Team has strong Docker expertise but limited orchestration experience

- Application requires basic orchestration without advanced features

- Cluster size will remain under 20 nodes with 10-50 services

- Operational simplicity is valued over ecosystem breadth

- Budget constraints prevent Kubernetes training and tooling investment

- Time-to-production is measured in days or weeks, not months

Choose Kubernetes When:

- Enterprise scale with dozens of services across numerous nodes

- Advanced features like autoscaling, network policies, or service mesh required

- Multi-cloud portability or cloud-agnostic architecture is strategic priority

- Dedicated platform engineering team exists or managed service budget available

- Long-term infrastructure investment justified by business growth projections

- Ecosystem integration with monitoring, security, and DevOps tools is essential

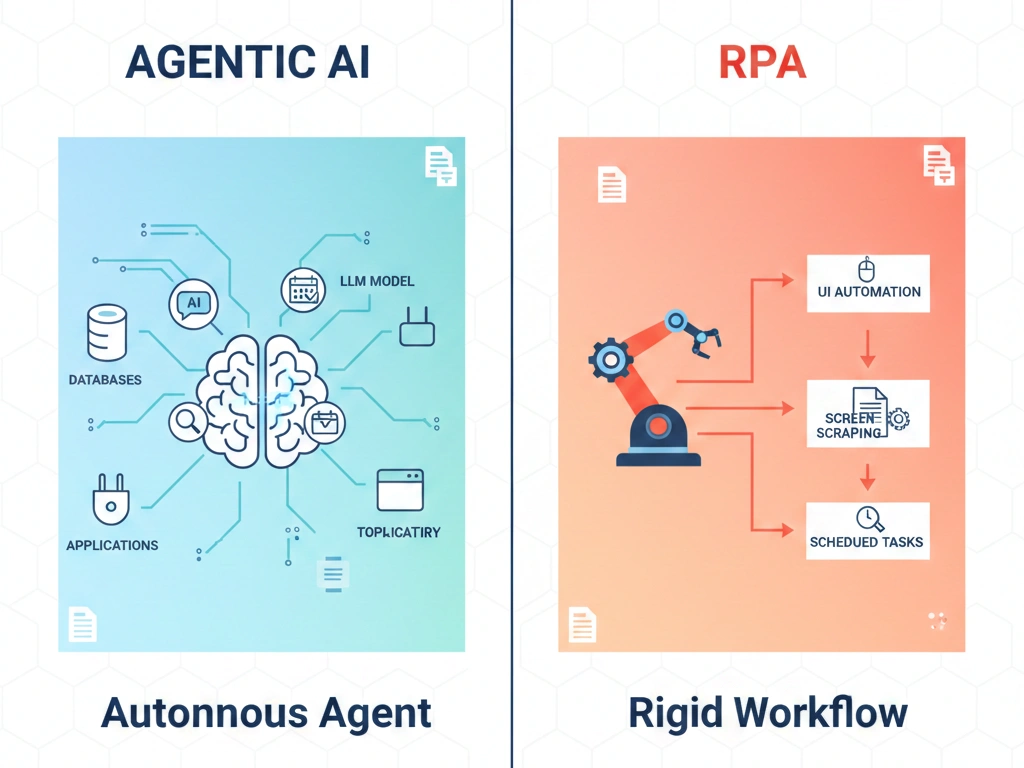

Strategic Recommendation for 2026:

Evaluate container orchestration needs honestly without succumbing to resume-driven development or fear of missing out. Just as intelligent automation requires matching technology sophistication to problem complexity, container orchestration platforms should align with actual requirements rather than perceived industry mandates. Small teams building simple applications gain nothing from Kubernetes complexity beyond resume keywords, while enterprises running mission-critical workloads at scale find Docker Swarm limiting despite its operational simplicity. Consider starting with Docker Swarm for proof-of-concept and MVP validation, then migrating to Kubernetes when scale, complexity, or team growth justifies the investment. Alternatively, use managed Kubernetes services to reduce operational burden if budget permits and the team has baseline expertise. Organizations that succeed long-term choose platforms matching their current capabilities while building the expertise to support future growth, rather than prematurely adopting infrastructure they cannot properly operate.

The container orchestration landscape in 2026 rewards pragmatic platform selection over trend-following. Whether you’re a student learning DevOps fundamentals, a developer architecting microservices, or an IT leader building cloud-native infrastructure, understanding that Docker Swarm and Kubernetes occupy different points on the complexity-versus-simplicity spectrum enables informed decisions that balance team productivity, operational costs, and technical requirements. Your competitive advantage comes not from the orchestrator’s brand name but from operational excellence in running the chosen platform while delivering business value through reliable, scalable application infrastructure.
